Robotic Process Automation (RPA) can simplify specific tasks such as content updates, meta tag changes, and website audits, but it is not a complete solution for search engine optimization (SEO).

Each page of your website should have its own meta description; the same description should not be used on more than one page.

In HTML, the meta description tag holds a short summary of what’s on a page. Search engines like Google often use it to generate the snippet that shows up in search results.

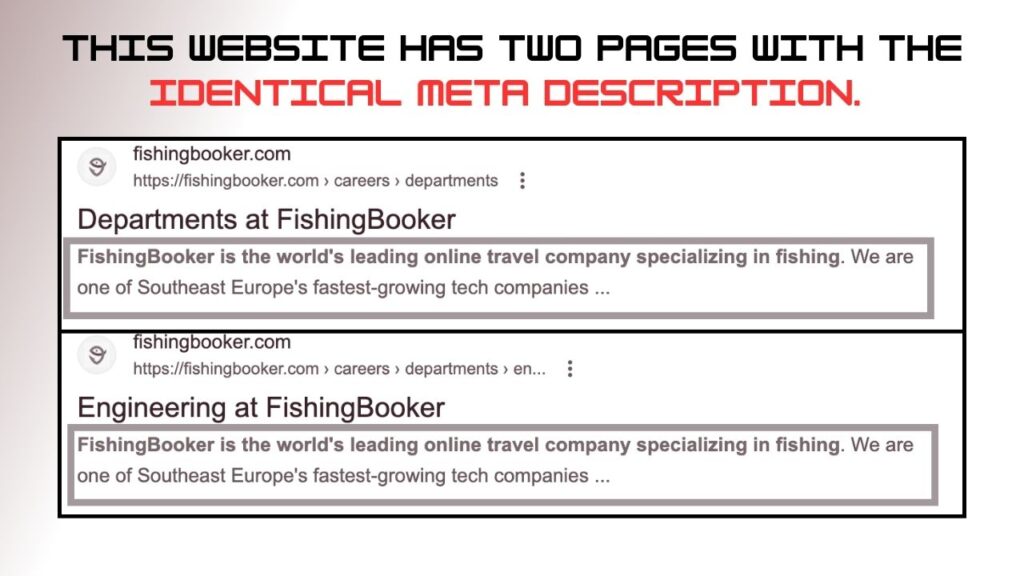

Let’s look at what it’s like to have duplicate meta descriptions.

This shows how the meta description of two pages on the same website can be the same.

But why is it a problem to have duplicate meta descriptions?

This is why having duplicate meta descriptions is a problem

When there are duplicate meta descriptions, they can hurt the user experience and the number of clicks from search engine results.

When meta descriptions for more than one page are the same, people might not be able to tell what each page is about.

That could make people who are interested in your website confused, so they might look for answers somewhere else, like on the websites of your rivals that rank on the same search engine results pages (SERPs).

Prioritize the Key Pages

If your site has a lot of duplicate meta descriptions, you should fix the most important ones first.

The most important pages to fix are the ones that are:

- Very important to the business: for example, sales landing pages with high conversion rates or pages that have recently seen a drop in traffic

- High opportunity for search traffic: for instance, pages that target keywords with a lot of searches and get a lot of impressions but not many clicks

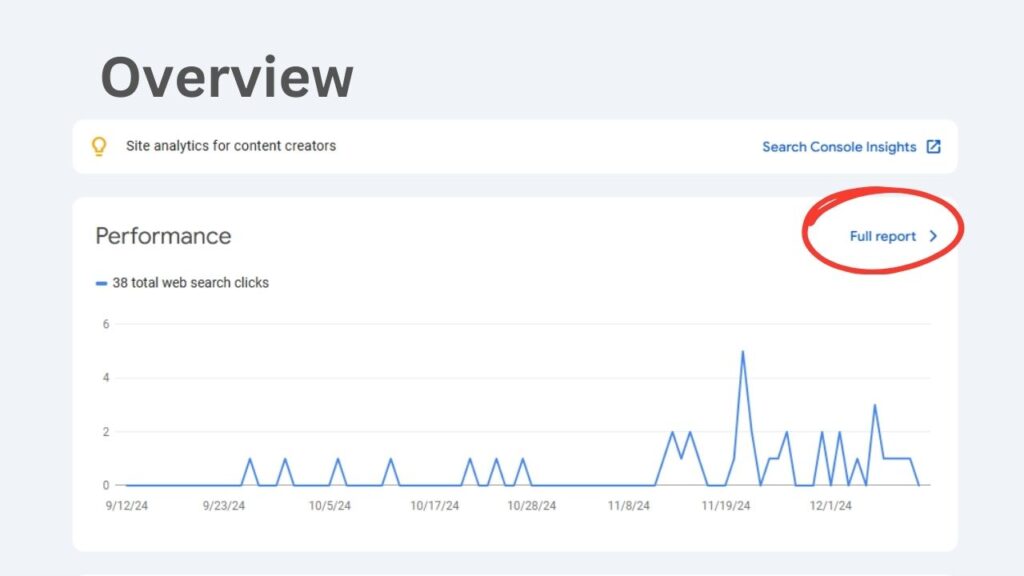

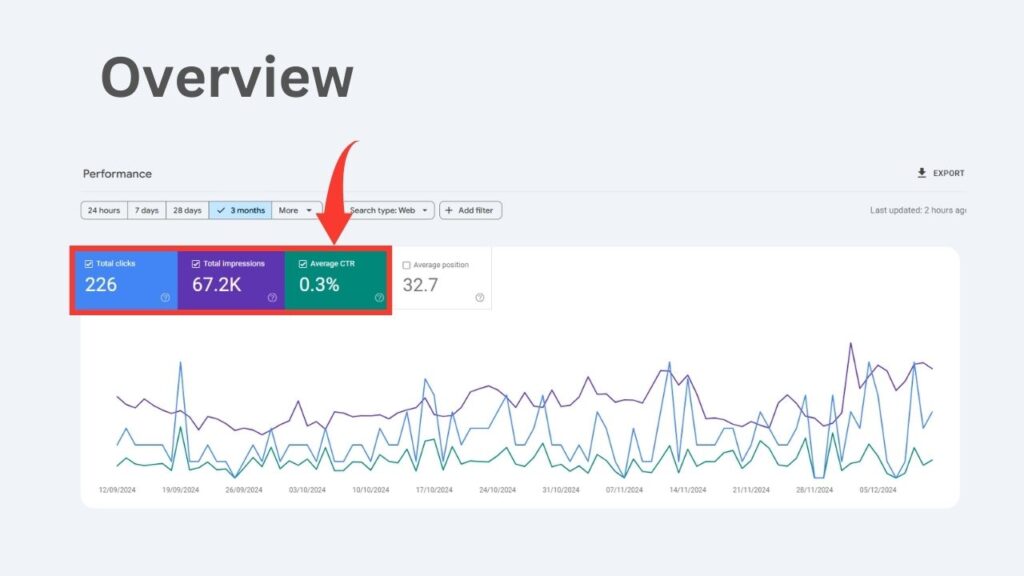

Let’s look at some ways to find pages that get a lot of organic impressions but not many clicks.

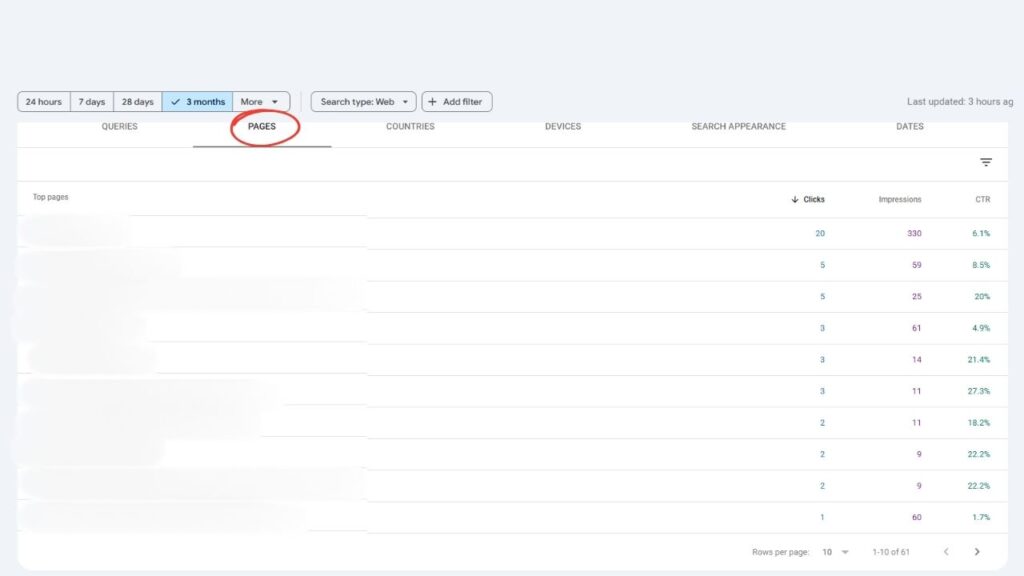

Click “Full report” in the “Performance” section.

To see all three measures at once, click “Total clicks,” “Total impressions,” and “Average CTR.”

Scroll down to “Pages.”

Look for pages with a lot of impressions but a low CTR. These pages come up a lot in search results, but people rarely click on them.

You might be able to get more clicks and direct traffic to these pages by making the meta descriptions better.
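If you export the page-level numbers to a CSV file, the same filter can be applied with a few lines of Python. This is only a rough sketch: the column names (`page`, `clicks`, `impressions`), the thresholds, and the function name `low_ctr_pages` are assumptions made for this example, so adjust them to match your own export.

```python
import csv
import io


def low_ctr_pages(rows, min_impressions=1000, max_ctr=0.01):
    """Return (page, impressions, ctr) for pages with many impressions but few clicks."""
    results = []
    for row in rows:
        impressions = int(row["impressions"])
        clicks = int(row["clicks"])
        if impressions < min_impressions:
            continue  # too little visibility to be a priority
        ctr = clicks / impressions
        if ctr <= max_ctr:
            results.append((row["page"], impressions, ctr))
    # Highest-visibility pages first
    return sorted(results, key=lambda item: item[1], reverse=True)


# Made-up sample data standing in for an exported report
SAMPLE_CSV = """page,clicks,impressions
/pricing,12,4800
/blog/seo-tips,310,5200
/contact,1,90
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
candidates = low_ctr_pages(rows)
for page, impressions, ctr in candidates:
    print(f"{page}: {impressions} impressions, CTR {ctr:.2%}")
```

With the sample data, only `/pricing` is flagged: it has plenty of impressions but a CTR well under the 1% threshold chosen here.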

Leaving duplicate descriptions in place, on the other hand, could hurt the image of your brand and lower the number of people who click through to your site.

Learn how to get rid of duplicate meta descriptions.

In four easy steps, let’s look at how to find and fix duplicate meta descriptions.

Look for pages with meta descriptions that are the same

It is easy to find pages with duplicate meta descriptions by getting an audit report from the Jainya services.

Let us check it out.

Visit the page and get in touch with our representative to get your website audit report as above.

If you have never used this tool before, read these steps on how to set up your first project.

Make meta descriptions that are unique and detailed

Write a different meta description for each page you chose.

In terms of meta descriptions, here are some of the best ways to write them:

Include the main term you want to rank for. That way, both people who read your page and search engines will know what to expect from it.

Answer the search query. Tell people that they can find what they need on your page. You could start your meta description with “learn how to style crocs…” if someone is looking for “how to style crocs.”

Check to see if it fits with what’s on the page. Don’t talk about something that isn’t on the page in the meta description. Doing so will make people disappointed and cause more people to leave the page quickly.

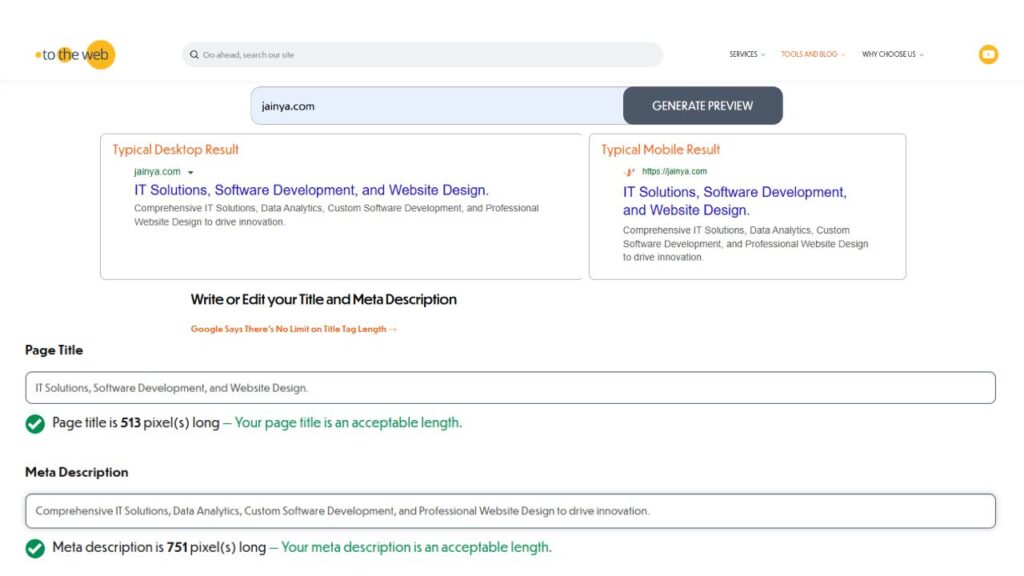

Do not go too long. Aim for about 105 characters (roughly 680 pixels wide) so that the meta description shows up in full on both desktop and mobile devices.

Start with the most important facts: This makes sure that the keywords show up even if Google cuts off some of the meta description.

Check your meta description with tools like To The Web’s to see how it will look in the SERPs.
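Before reaching for a preview tool, a rough character count can catch descriptions that are clearly too long. The snippet below is a minimal sketch in Python, not part of any SEO tool: the function name `check_description` is made up for this example, the 105-character limit is the figure mentioned above, and real truncation in search results depends on pixel width, so treat the preview as an approximation only.

```python
MAX_CHARS = 105  # character guideline used in this article; an approximation


def check_description(text, limit=MAX_CHARS):
    """Report the length of a meta description and a rough truncated preview."""
    text = " ".join(text.split())  # collapse stray whitespace and line breaks
    if len(text) <= limit:
        return {"length": len(text), "fits": True, "preview": text}
    # Cut at the last word boundary before the limit
    cut = text[:limit].rsplit(" ", 1)[0]
    return {"length": len(text), "fits": False, "preview": cut + "..."}


short_result = check_description("Learn how to style crocs for any season.")
long_result = check_description(
    "Discover the top SEO tips and strategies to boost your website's "
    "rankings, traffic, and conversions. Learn more here and get started."
)
print(short_result["fits"], short_result["length"])
print(long_result["fits"], long_result["preview"])
```

Because the preview is cut at a word boundary, it also shows why the most important facts belong at the start of the description.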

Make changes to your website’s meta descriptions and keep an eye on them

Once you have written the new meta descriptions, update them in the content management system (CMS) of your website.

Running the Site Audit check again after that will help you make sure that the meta descriptions were changed correctly.

The pages you changed should no longer appear in the report.

If the pages you changed still show up, check that the new descriptions were saved and published, and then run the audit again.

Example of a Meta Description:

For a blog page about “SEO Tips for 2024-2025”:

<meta name="description" content="Discover the top SEO tips and strategies for 2024 to boost your website's rankings, traffic, and conversions. Learn more here.">

For a product page selling “Smartphone Accessories”:

<meta name="description" content="Shop the best smartphone accessories, including cases, chargers, and screen protectors, at affordable prices. Free shipping available.">

You can help search engines understand what a page is for by making each meta description unique to the page’s content. This is good for both SEO and the user experience.

Robotic Process Automation (RPA)

Robotic Process Automation (RPA) is a technology that uses software robots, or “bots,” to automate repetitive tasks, often within organizational processes. While RPA can automate tasks such as data extraction, form completion, and reporting, it is generally not designed for SEO work such as fixing duplicate meta descriptions on the spot.

Nevertheless, RPA can be integrated into a broader workflow to help detect and fix duplicate meta descriptions in the following ways:

How RPA Can Help with Duplicate Meta Descriptions:

Identification of Duplicate Meta Descriptions:

- An RPA bot can be configured to crawl a website, extract the meta description from each page, and compare the results to detect duplicates.

- The bot can generate a report or notify the user when duplicate meta descriptions are detected, which cuts down the time spent on manual checks.
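The extract-and-compare step can be illustrated with a short Python sketch that uses only the standard library. It is a simplified stand-in for what a bot would do, not a real RPA product: the class and function names are made up for this example, and the pages are hard-coded HTML strings, so fetching them from a live site is left out.

```python
from collections import defaultdict
from html.parser import HTMLParser


class MetaDescriptionParser(HTMLParser):
    """Collects the content of the first <meta name="description"> tag."""

    def __init__(self):
        super().__init__()
        self.description = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.description is not None:
            return
        attr_map = dict(attrs)
        if (attr_map.get("name") or "").lower() == "description":
            self.description = (attr_map.get("content") or "").strip()


def extract_description(html):
    parser = MetaDescriptionParser()
    parser.feed(html)
    return parser.description


def find_duplicates(pages):
    """Map each description used on two or more pages to the URLs that use it."""
    groups = defaultdict(list)
    for url, html in pages.items():
        description = extract_description(html)
        if description:
            groups[description].append(url)
    return {desc: urls for desc, urls in groups.items() if len(urls) > 1}


# Hypothetical pages; a real bot would download these from the site
pages = {
    "/cases": '<head><meta name="description" content="Shop our products online."></head>',
    "/chargers": '<head><meta name="description" content="Shop our products online."></head>',
    "/about": '<head><meta name="description" content="Learn who we are."></head>',
}

duplicates = find_duplicates(pages)
print(duplicates)
```

Here `/cases` and `/chargers` share a description, so they are grouped together, while `/about` is left out of the result.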

Automated Reporting and Logging:

- Once duplicates have been identified, the bot can record the findings in a structured format (for example, Excel or a database) and then notify webmasters or SEO teams so that they can take the next steps.
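As a rough illustration of the logging step, the Python sketch below writes a set of duplicates to CSV, which can be opened in Excel. The function name `write_duplicate_report`, the column names, and the sample data are all assumptions made for this example; sending the notification is not shown.

```python
import csv
import io


def write_duplicate_report(duplicates, out):
    """Write one row per duplicated description to a file-like object."""
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["meta_description", "page_count", "urls"])
    for description, urls in sorted(duplicates.items()):
        writer.writerow([description, len(urls), " | ".join(urls)])


# Hypothetical findings: description -> pages that share it
found = {
    "Shop our products online.": ["/cases", "/chargers"],
}

buffer = io.StringIO()  # swap for open("report.csv", "w", newline="") to save a file
write_duplicate_report(found, buffer)
report = buffer.getvalue()
print(report)
```

Keeping one row per description, with the affected URLs side by side, makes it easy for the SEO team to see which pages need a rewrite.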

Basic Fixes for Meta Descriptions:

- Basic Modifications: If the goal is to rewrite meta descriptions in a more general format (for instance, using a template), an RPA bot can update descriptions in bulk by applying predefined rules or inputs.

- Data Extraction for Manual Updates: The RPA bot can extract content from the pages where it finds duplicate descriptions. This content can then be revised manually or sent to content authors for rewriting.
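A template-based bulk update might look something like the Python sketch below. Everything in it is hypothetical: the template text, the field names (`product`, `store`, `benefit`), the store name, and the function names are invented for illustration, and the 105-character limit is the guideline from earlier in this article.

```python
TEMPLATE = "Shop {product} at {store}. {benefit}"


def fill_template(template, fields, limit=105):
    """Fill a template and trim the result at a word boundary if it is too long."""
    text = " ".join(template.format(**fields).split())
    if len(text) > limit:
        text = text[:limit].rsplit(" ", 1)[0]
    return text


def bulk_descriptions(template, pages, limit=105):
    """Build one description per page and refuse to produce new duplicates."""
    result = {}
    seen = set()
    for url, fields in pages.items():
        description = fill_template(template, fields, limit)
        if description in seen:
            raise ValueError(f"Template produced a duplicate for {url}")
        seen.add(description)
        result[url] = description
    return result


# Hypothetical page data that a bot might pull from a product catalogue
catalogue = {
    "/cases": {"product": "phone cases", "store": "ExampleStore",
               "benefit": "Free shipping available."},
    "/chargers": {"product": "fast chargers", "store": "ExampleStore",
                  "benefit": "Free shipping available."},
}

new_descriptions = bulk_descriptions(TEMPLATE, catalogue)
for url, description in new_descriptions.items():
    print(url, "->", description)
```

The duplicate check matters here: a template with too few page-specific fields would simply replace one set of duplicate descriptions with another.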

However, RPA Alone is Not Enough for SEO Fixes

SEO-Specific Knowledge:

- In most cases, fixing duplicate meta descriptions requires someone who understands what each page is about and how its description should reflect that. SEO experts often write or change meta descriptions to make sure they match both the page content and what the user is looking for.

Dynamic Updates:

- Using RPA for a one-time fix is not enough when the content keeps changing, as it does on product catalogue pages or blog entries. SEO specialists should therefore review the meta descriptions regularly to make sure they are still accurate.

Example Workflow Using RPA for Duplicate Meta Descriptions

Crawl and Extract Meta Descriptions:

- An RPA bot can navigate all the pages of a website and collect the meta description element from each one.

Detect Duplicates:

- The bot can compare the descriptions to identify pages whose descriptions are identical or very similar.
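Catching descriptions that are very similar, rather than strictly identical, needs some kind of fuzzy comparison. One possible approach, sketched below in Python, uses `difflib.SequenceMatcher` from the standard library. The 0.9 threshold, the function names, and the sample descriptions are assumptions chosen for this example, and comparing every pair of pages will get slow on very large sites.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalize(text):
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def similar_pairs(descriptions, threshold=0.9):
    """Return (url_a, url_b, ratio) for every pair at or above the threshold."""
    pairs = []
    for (url_a, desc_a), (url_b, desc_b) in combinations(sorted(descriptions.items()), 2):
        ratio = SequenceMatcher(None, normalize(desc_a), normalize(desc_b)).ratio()
        if ratio >= threshold:
            pairs.append((url_a, url_b, round(ratio, 2)))
    return pairs


# Hypothetical descriptions already extracted from three pages
descriptions = {
    "/cases": "Shop the best smartphone cases at affordable prices.",
    "/cases-sale": "Shop the best smartphone cases at affordable prices!",
    "/crocs-guide": "Read our guide to styling crocs for every season.",
}

matches = similar_pairs(descriptions)
for url_a, url_b, ratio in matches:
    print(f"{url_a} and {url_b} look alike (similarity {ratio})")
```

In the sample data, the two product pages differ only in the final punctuation mark, so they are reported as a pair, while the guide page is not.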

Alert for Action:

- After detecting duplicate descriptions, the bot can issue a warning or send a report to the website administrator or the SEO team.

Manual Intervention:

- SEO professionals can then rewrite the meta descriptions manually, making sure that each one is unique and relevant to the content of its page.

Optional Automated Bulk Update (If Rules Are Defined):

- In some cases, an RPA bot can be configured to generate new meta descriptions from existing templates or page content. However, this is usually only suitable for simpler or more generic content.

Conclusion

RPA can make it easier to identify and report duplicate meta descriptions, but manual work or SEO tools are still needed to update and optimize them effectively. An effective search engine optimization approach combines RPA for efficiency with human knowledge for high-quality content.
